By: Alex Wall

Imagine you are the driver of a runaway train.

The brakes on your train have failed, but you still have the ability to steer the train from the main track to a single alternate track. You can see the two tracks ahead of you; on the main track there are five workers, on the alternate track there is one. Both tracks are in narrow tunnels so whichever track the train takes, anyone on that track will surely be killed. Which way do you steer the train? Do you let it continue down the main track, killing five, or do you switch it onto the alternate track, killing one?

This is the famous Trolley Problem. First formulated by Philippa Foot in 1967, it has been a staple in ethics and moral philosophy courses ever since. The reason why is clear: however you answer it reveals something about your gut sense of what is right and wrong—what we call moral intuition—and, by thinking through different versions of the problem, it is possible to try to refine those moral intuitions into explicit moral principles.

For example, most people respond to the Trolley Problem by saying that they would steer the train onto the alternate track. Their gut feeling, or moral intuition, is that it is better to pick the option that kills only one person rather than the option that kills five.

If you were to express this moral intuition as a principle, you might say that “five lives are worth more than one”, or that “the needs of the many outweigh the needs of the few”. This sort of utilitarian principle matches what most people report as their gut reaction to the original Trolley Problem.

But what happens when the problem is modified? One famous variation on the Trolley Problem, first offered by Judith Jarvis Thomson in 1976, is as follows:

As before, a trolley is hurtling down a track towards five people. You are on a bridge under which it will pass, and you can stop it by putting something very heavy in front of it. As it happens, there is a very fat man next to you – your only way to stop the trolley is to push him over the bridge and onto the track, killing him to save five. Should you proceed?

Again, most people have a strong gut reaction to this version of the problem, viewing it as clearly wrong to push the fat man onto the tracks. This includes the vast majority of people who said they would switch the train onto the alternate track, sacrificing one in order to save five. In the previous case, their moral intuition lined up with the simple utilitarian principle that you should do whatever will save the most lives. But now, faced with the scenario where they would have to actively throw a person onto the tracks, their moral intuition rejects such a principle.

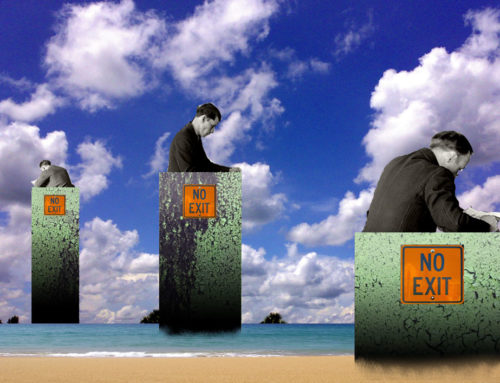

This is the power of the Trolley Problem. What initially seems to be an easy task of turning moral intuitions into moral principles proves to be considerably more difficult as new versions of the problem are introduced and we try to reconcile the differences in our answers.

Now, in the age of self-driving cars, we have a fascinating new variation on the Trolley Problem: what if you didn’t get to choose in the moment, but rather the choice was determined by the vehicle? In this case, the choice, insofar as it is still appropriate to use that word, will be made by the programmers of the vehicle. They will have to anticipate all sorts of possible no-win scenarios that their vehicles might find themselves in, and create rules that the vehicles will follow to make their decisions.

But can the clear rules-based language of computer code handle the nuances of ethical problems? Can programmers solve the Trolley Problem using explicit principles rather than vague gut feelings? Or will they find, like so many students in philosophy classes have before them, that their attempts to refine their moral intuitions into principles fail to account for some new situation that comes up?

Self-driving cars give the Trolley Problem a new relevance. Only now the problem isn’t a thought experiment in a classroom, but a steel-and-glass one on the roads.

The “Trolley Problem” reveals more about the weakness of our moral convictions than it presents an actual moral dilemma.

In moral terms, the solutions to the problem are quite clear. In the version proposed by Philippa Foot, obviously you should steer the train to kill one person rather than five. And in the later version proposed by Judith Jarvis Thomson, obviously you should sacrifice the fat man to save the greater number of people.

These are the right, or moral, choices to make in the given situations. They might not feel easy or pleasant to do, but if that gives us pause, it only means that we lack the strength of our convictions, not that the right choice is hard to determine. It probably also means that we prefer ease and pleasantness to right behavior, or that we expect/demand that moral action should be easy and pleasant.

One can criticize this thinking on the grounds that it prefers the “utilitarian” position, but that is unfair because the thought experiment is set up so that the only possible right choice is utilitarian. We don’t know who the people are on the tracks; the five guys might be child abusers and the one guy might volunteer at soup kitchens in his off-time. We are only given their numbers, without any other substantive information. As such, we can only make a choice based on numbers.

Similarly, in the case of the fat man, we know nothing about him (other than that he is fat) or the people that might be saved by pushing him onto the tracks. If he were a friend or relative of ours, for example, both our feelings and the character of the moral choice before us might justifiably be changed. I wouldn’t kill my buddy, fat or not, for the sake of five perfect strangers.

Again, when the only information given is numerical, the choice can only be made on the basis of numbers. And the right choice isn’t difficult to determine. It might be difficult to act upon, but that would be because our moral judgments – down to our gut feelings – have been cast into doubt. We don’t trust our guts anymore.

I agree with your fine critical analysis of the Trolley Problem, Charlie.

That said, I think that your arguments make the article’s point even stronger when it comes to the matter of self-driving cars. It would be relatively simple to program such cars to make moral choices on the basis of numbers. But numbers won’t suffice.

As you basically point out, what if the one person your car chooses to kill, in order to spare five other people, happens to be your mother? Morality is ever so much more complex than the Trolley Problem makes it out to be.

This would seem to be why the author, Mr. Wall, says that refining one’s moral intuitions into principles will fail to take into account new situations. I would argue that moral principles don’t just fail new situations, they distort morality as such. Morality is concerned with people, not principles.

I’d also like to add the following wrinkles.

Clearly, self-driving cars will never be able to make sound moral decisions. But how consistently do human drivers make sound moral decisions? Self-driving cars would at least be more reliable than we are when it comes to just driving. No more drunk drivers!

Also, suppose that we were to pass laws against the development or use of self-driving cars because they will never be able to make sound moral decisions. Then suppose that some other countries don’t do the same. Are we prepared to be the only country left behind?

Don’t know what to make of Jay’s point about some countries adopting self-driving cars and others not doing so. Must be about international competition. But the idea that human drivers make bad moral and technical decisions all the time is great. It’d be awesome to see a study comparing the number of fatalities caused by human drivers to the number caused by self-driving cars. And then we could compare the moral aspects too. Drunk drivers alone probably make us humans less “moral” than the alternative.

Why does the guy have to be fat? Maybe he’s just big. Maybe he’s a power lifter. Why does he have to be fat?

Alex Wall hates fat people.

How many fat men have been killed in thought because of Thomson’s version of the Trolley Problem?

I know this is probably a joke, but it’s still a fascinating snapshot of how much society has changed since the 70s.

When the thought experiment was invented, the example of the fat man was probably seen as totally unobjectionable. Now, it’s practically like saying imagine there are 5 people on the tracks, and 1 gay man next to you. Or, depending on your view of fat people, 1 criminal. The debate around obesity has become so charged, it’s impossible not to see some moral element to the fat man.

Just look at the recent fracas on Reddit. First of all, there was a webpage, a popular webpage, devoted to hating fat people. Then this webpage got taken down, because the administrators wanted the site it was part of to become a safe space, and that meant getting rid of any discrimination against the obese. Can you imagine trying to explain any of that to someone from the 70s? Or the fat acceptance movement?

I couldn’t explain any of that to a person from the 70s. But there’s an awful lot that I doubt that a person from the 70s could explain to me… starting with leisure suits.

The guy could be fat or skinny, but if he were wearing a leisure suit, I’d toss him onto the tracks just for committing a fashion faux pas. I wouldn’t even care if it saved lives or not.

The self-driving trolley will have far more information than a human to make these decisions. It will use face recognition to know the people it is driving into; it could use their profiles to decide based on their medical records and how long they have to live, their potential to improve or degrade the world, the amount of life insurance they will leave their survivors, how much their social network cares about them, etc. Big data will ultimately make the right choice. Although probably not as good as a human driver who is drunk.

http://www.theatlantic.com/health/archive/2014/10/the-cold-logic-of-drunk-people/381908/

Do you really have that kind of faith in “Big Data”? Could it possibly have an accurate assessment of each person’s potential to improve or degrade the world? How on earth would that be ascertained? How would it even begin to capture any individual human being’s capacity for change?

Saul of Tarsus spent the first part of his life persecuting Christians. Had his “data” been collected at that point, what basis would there be for regarding him as Paul the Apostle, who would later repent for his misdeeds and go on to be one of the most important figures in the formation of Christianity?

What if you were equally fat and standing beside the fat man? Would it still be ethical to push him?

It is an interesting question in terms of the self-driving car, though rather than If/Then logic branches, I imagine the approach would be something fuzzier like machine learning, where you teach it with examples (recall the recent Google ‘dreaming’ AI images), and it could be refined over time to make the relatively more “correct” choice in most cases, certainly more often correct than humans in a split-second decision.

Anaud raises the horror of relative worth weighting where a busload of degenerates would be sacrificed to save a single Nobel prize winner (or wealthy banker, or the author of the software!). Linked would be the relative worth of the occupants of the car themselves, and so whether they would actually trust the car to do what is in their best interest. On the other hand, it could level the field for pedestrians and cyclists in situations where a human driver would typically act for self-preservation (i.e., it would actually ENFORCE the correct trolley choice!).

Practically speaking, when a significant percentage of cars are self-driving and the technology has matured, I would assume traffic deaths would plummet and there will be fewer trolley moments overall, since the computer is an expert driver and was not speeding, texting, drunk, angry, sleeping, running a red, having a heart attack, tailgating, etc.

In that case, the most important self-driving trolley problem is not within an individual automobile, but rather the overall moral choice for our society of whether we continue to allow human-driven cars. If we steer our trolley onto the computer track, then we could save a significant fraction of the 1.25 million annual auto deaths worldwide.

Sometimes good judgment can compel us to act illegally. Should a self-driving vehicle get to make that same decision?